Stefano Zacchiroli: je code

je.code(); promoting programming (in French)

jecode.org is a nice initiative by, among others, my fellow Debian developer and university professor Martin Quinson. The goal of jecode.org is to raise awareness about the importance of learning the basics of programming, for everyone in modern societies. jecode.org specifically targets francophone children (hence the name, for "I code").

I've been happy to contribute to the initiative with my thoughts on why learning to program is so important today, joining the happy bunch of "codeurs" on the web site. You can find them reposted below, translated from the French. If you also write French, you might want to contribute your thoughts on the matter. How? By forking the project of course!

Why do you code?

First of all, I code because it is an exciting, fun activity that lets you experience the pleasure of creating. Second, I code to automate the repetitive tasks that can make our digital lives tedious. A computer is designed for exactly that: freeing human beings from stupid tasks, so that they can concentrate on the tasks that need human intelligence to be solved. But I also code for the pure pleasure of hacking, i.e., finding original and unexpected uses for existing software.

How did you learn?

Completely by chance, when I was a kid. At 7 or 8, in the public library of my small village, I stumbled upon a book that taught BASIC programming through the metaphor of the Game of the Goose. From that day on I used my father's Commodore 64 much more for programming than for video games: coding is so much more fun! Later, in high school, I came to appreciate structured programming and the enormous advantages it brings over BASIC's GO TO, and I became a Pascal addict. The rest came with university and the discovery of Free Software: the Ali Baba's cave of the curious coder.

What is your favorite language?

I have several favorite languages. I like Python for its syntactic minimalism, its large and well-organized community, and the abundance of tools and resources available for it. I use Python for developing medium to large infrastructures (often equipped with Web interfaces), especially if I want to build a community of contributors around the software. I like OCaml for its type system and its ability to capture the right properties of complex applications. This lets the compiler help developers enormously in avoiding both coding and design errors. I also use Perl and shell scripting (mainly Bash) a lot for task automation: the ability of these languages to connect other applications together is still unmatched.

Why should everyone learn to program, or at least be introduced to it?

We are more and more dependent on software. When we use a dishwasher, drive a car, are treated in a hospital, communicate on a social network, or surf the Web, our activities are constantly carried out by software. Whoever controls that software controls our lives. As citizens of an increasingly digital world, if we do not want to become slaves 2.0, we must claim control over the software that surrounds us. To get there, Free Software (which allows us to use, study, modify, and reproduce software without restrictions) is an indispensable ingredient. So is a wide spread of programming skills: every bit of knowledge in this field makes all of us freer.

I am happy to announce the release of OASIS v0.4.3.

OASIS is a tool that helps OCaml developers integrate configure, build and install systems into their projects. It should help create standard entry points in the source code build system, allowing external tools to analyse projects easily.

This tool is loosely inspired by Cabal, which is the same kind of tool for Haskell.

You can find the new release

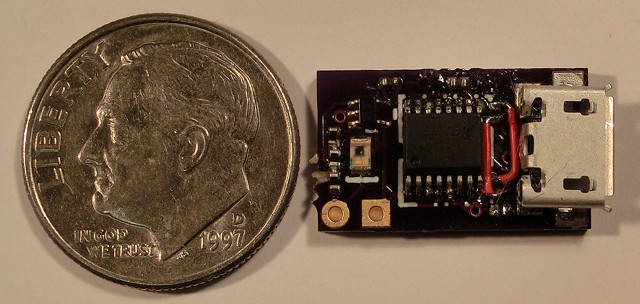

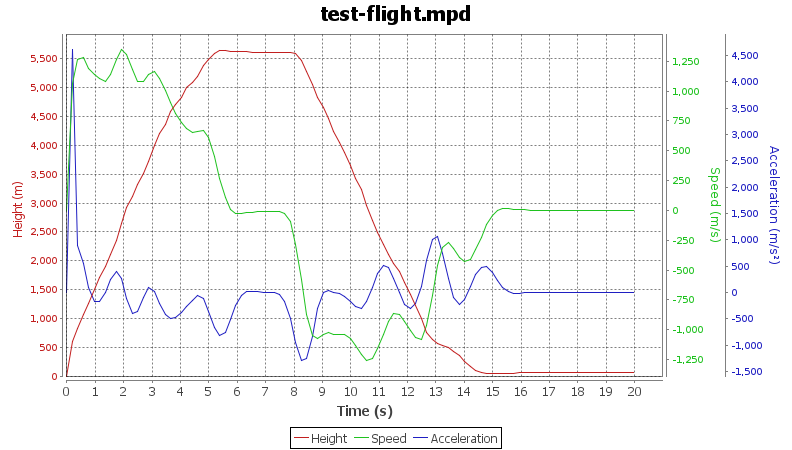

In April of 2012 I bought a

MicroPeak Serial Interface Flight Logging for MicroPeak

I feel a sense of pride when I think that I was involved in the development and maintenance of what was probably the first piece of software accepted into Debian which then had and still has direct up-stream support from Microsoft. The world is a better place for having Microsoft in it. The first operating system I ever ran on an

There are

This is both a release announcement for the next installment of The MirBSD Korn Shell,

(This is part of a series of posts on

After the recent switch of this blog to Django, I also planned to switch the rest of my website to the same engine. Most of the code is already written; however, I've found some forgotten parts which nobody has used for a really long time.

As most of the things there are free software, I don't like the idea of removing them completely; however, it is quite unlikely that somebody will want to use these ancient things, especially since they lack documentation and I had really forgotten about them (most of them being Turbo Pascal things for DOS, Delphi components for Windows and so on). Anyway it will probably live only on

I'm a terrible hoarder. I hang onto old stuff because I think it might be fun to have a look at again later, when I've got nothing to do. The problem is, I never have nothing to do, or when I do, I never think to go through the stuff I've hoarded. As time goes by, the technology becomes more and more obsolete to the point where it becomes impractical to look at it.

Today's example: the 3.5" floppy disk. I've got a disk holder thingy with floppies in it dating back to the mid-nineties and earlier. Stuff from high school, which I thought might be good for a giggle to look at again some time.

In the spirit of

I'm working on some ideas for finance or news software that deliberately updates infrequently, so it doesn't reward me for checking or reloading it constantly. I came up with the name "microhertz" to describe the idea. (1 microhertz ≈ once every eleven and a half days.)
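The eleven-and-a-half-days figure follows from the period being the reciprocal of the frequency: one cycle at 1 microhertz takes 10^6 seconds. A minimal Python sketch of that arithmetic (the helper name `period_in_days` is made up here for illustration):

```python
# Period of a periodic signal, expressed in days.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day


def period_in_days(frequency_hz: float) -> float:
    """Return the period (1 / frequency) of a signal, in days."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return (1.0 / frequency_hz) / SECONDS_PER_DAY


microhertz = 1e-6  # 1 microhertz expressed in hertz
print(f"{period_in_days(microhertz):.2f} days")  # about 11.57 days
```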

As usual when I think of a project name, I did some DNS searches. Unfortunately "microhertz.com" is not available (but "microhertz.org" is). Then I went off on a tangent and got curious about which other SI units are available as domain names.

This was the perfect opportunity to try

A couple of weeks ago I updated my Debian Sid setup on the MacBook to use disk encryption; this post is to document what I did for later reference.

The system was configured for dual booting Debian or Mac OS X using
